TikTok is failing to contain violent beheading content on its platform

From true crime deepfakes to reuploaded violent content, TikTok is flooded with videos publicizing the beheading of a federal employee

Written by Abbie Richards

Content warning: This piece features graphic imagery and descriptions of violent events.

After Justin Mohn allegedly killed and beheaded his father on January 30, he uploaded a video to YouTube in which he holds the severed head aloft while spouting right-wing conspiracy theories. The video remained on YouTube for more than five hours and garnered roughly 5,000 views. Now, copies of Mohn’s violent propaganda and related deepfakes have racked up millions of views on TikTok. Some of these videos appear to violate TikTok’s policies.

TikTok’s community guidelines prohibit “gory, gruesome, disturbing, or extremely violent content,” which includes “body parts that are dismembered, mutilated, charred, burned, or severely injured.” The community guidelines also state that TikTok does not allow “the presence of violent and hateful organizations or individuals” on its platform.

Circulating violent extremist content

Media Matters identified TikTok videos containing portions of Mohn’s tirade that had garnered 737,000 views, 1.1 million views, 692,000 views, and 2.8 million views. Media Matters also identified dozens of videos that featured still images of Mohn holding his father’s severed head with the head blurred out. One video with 1.9 million views featured a still image of Mohn holding the severed head, which, while partially out of frame, was unblurred and extremely graphic.

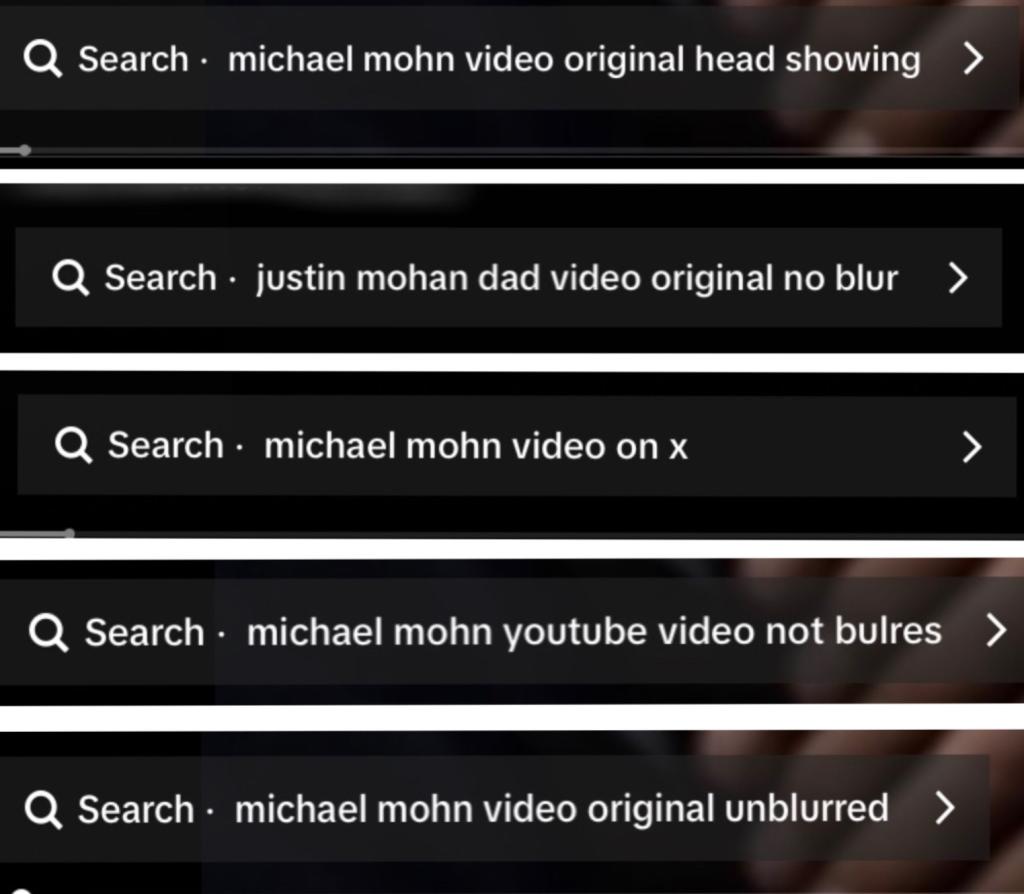

TikTok’s suggested search feature, which recommends related searches to a user who engages with a video based on other users’ activity, has also been recommending searches for unblurred images of Michael Mohn’s severed head. It seems that so many users searched for the unblurred graphic content after watching the video that the platform’s automated system began suggesting the search to other viewers, even though this content would seemingly violate TikTok’s terms of service. Following these suggested search prompts led Media Matters to reuploads of Mohn’s original video.

TikTok suggested the search prompt “justin mohan dad video original no blur” under at least two videos featuring a reuploaded clip of Mohn’s YouTube screed. Another video, which featured a still image of Mohn holding up a blurred severed head, suggested users search for “michael mohn video original unblurred.” Later, the prompt under the same video read “michael mohn video original head showing.”

Some TikTok users have complained about being exposed to the graphic content. In one video, a user says: “He pulled out his father’s head and I literally just saw that on TikTok. … I didn’t go looking for the video. I did not want to see the video. Literally, why is it showing up on my For You page?”

Deepfake Dystopia

Deepfake videos of Michael Mohn also appear to have emerged in the wake of the alleged killing. These videos are part of a larger sea of extremely popular TikTok videos: deepfakes of true crime victims. Media Matters identified at least 16 uploads of an apparently artificial intelligence-generated Michael Mohn recounting the killing.

One video with 5 million views, which was uploaded just three days after Mohn’s alleged killing, seemingly uses an AI-generated image, AI-generated animation, and an AI-generated voice to recount the violent attack. In it, a depiction of Michael Mohn describes his own killing from a first-person perspective over a royalty-free background track called “Suspense, horror, piano and music box.”

The video gets a key fact about Mohn’s death wrong, claiming that he was killed with a knife when officials say he was actually shot to death. It ends by asking viewers, “In your opinion, should my son go to prison?”

The video, which is 1 minute and 3 seconds long, is just long enough to qualify for TikTok’s Creativity Program Beta, the program designed to compensate creators for content. The account appears to meet most of the criteria for the Creativity Program, and the video has similarities with other monetized TikTok content, though it is unclear whether this specific video is monetized.

The account that posted the video, called “detectivefile,” shares apparently AI-generated horror content with its audience of nearly a million followers, typically featuring deceased or imprisoned people who have been animated to recount their murders, deaths, and/or abuse. In a video posted the day before the Michael Mohn video was shared, a seemingly AI-generated 5-year-old covered in cocaine recounts taking the drug and shooting his little brother. Media Matters could not identify any videos under 60 seconds long from the account, meaning all of its videos would likely be long enough to be eligible for compensation from the Creativity Program.

The account’s bio reads, “Want to generate 10k per Month like me?” and links to the purchasing page for a course claiming that it will teach customers how to make money online.

TikTok’s Creativity Program has been financially rewarding sensationalist and conspiracy theory content, and it appears that it may also be rewarding disturbing true crime content. While TikTok should be compensating creators, the platform needs to ensure that it is not financially incentivizing violent, sensationalist, or inaccurate content, especially now that AI has made creating this type of content easier than ever.

Furthermore, as AI has become increasingly prevalent online, social media platforms like TikTok must evolve their policies to account for the new harms the technology can cause. According to TikTok’s community guidelines, the platform does “not allow synthetic media that contains the likeness of any real private figure.” Perhaps if someone becomes a public figure only in death, they should not be considered a viable option for AI reanimation.